原文:http://davidaq.com/tutorial/2014/11/20/ffmpeg-commands.html

FFmpeg是一个开源免费跨平台的视频和音频流方案,属于自由软件。 别看这东西只有几十Mb,但却是个能格式转换、剪辑、播放几乎无所不能的命令行软件。 就如格式工厂,其核心也是FFmpeg。 在专业领域常被部署在服务端,用以做云端视频相关服务。 如七牛云存储就是利用FFmpeg来完成各种格式转换的。 其官方网址为:FFmpeg.org。 在那里可以下载到各种主流电脑平台的FFmpeg程序。

FFmpeg主要包含四个程序:

ffmpeg主要用于对媒体文件的内容进行操作,如格式转换等,是最主要的部件ffplay简易播放器,虽然没有什么UI,但是能播放各种格式的视频ffprobe用于探查媒体文件的属性,如meta标签等,可以选择输出JSON或XML格式ffserver流媒体服务器,不可多得的免费流媒体服务器软件,可用于架设视频直播

FFmpeg除了提供可运行程序,还提供一套libav多媒体处理C库,可集成到别的软件当中提供多媒体文件解码、编码等功能。

对于一些比较专业的命令,本文也不会过多叙述,因为那需要更多的多媒体文件基础知识才能理解。 另外注意,这里讲的是正统的FFmpeg,而不是Debian搞出来的分支LibAV,里面那个ffmpeg(Ubuntu内置的)。

视频篇

以下命令主要针对视频文件操作

格式转换

ffmpeg最常用功能就是格式转换,在这里要特别提的是,音、视频文件格式有两个容器格式(如mov、flv)与编码格式(如H.264)。 很多人知道前者,却不知道后者,二者的关系与异同可在别处查到,不在此赘述。 简单的格式转换如下:

ffmpeg -i input.flv output.mp4

上面的命令就把一个flv文件转换成了一个mp4文件,其中-i xxx.xxx指定的输入文件,单独写的文件名指定输出文件路径。

一般FFmpeg会根据文件格式选择最合适的容器格式与编码格式,也可以手动指定。 常见的用例是需要一个保留alpha通道的视频,通常会使用mov容器格式,png编码格式,但是FFmpeg会默认使用H.264编码格式(不支持透明)。 如此命令如下:

ffmpeg -i input.flv -c:v png output.mov

想要知道自己的FFmpeg都支持哪些容器格式,使用命令ffmpeg -formats。 看都支持哪些编码格式,使用命令ffmpeg -codecs。

特别强调,如果处理文件不是图片,不要让输入与输出文件相同

使用vaapi硬件编解码转码

1.默认方式

ffmpeg -vaapi_device /dev/dri/renderD128 -i input.mp4 -vf 'format=nv12,hwupload' -c:v h264_vaapi output.mp4

2.输入输出均支持vaapi硬件编解码

ffmpeg -hwaccel vaapi -hwaccel_output_format vaapi -hwaccel_device /dev/dri/renderD128 -i input.mp4 -c:v h264_vaapi output.mp4

3.输入文件不支持vaapi解码,使用vaapi编码

ffmpeg -init_hw_device vaapi=foo:/dev/dri/renderD128 -hwaccel vaapi -hwaccel_output_format vaapi -hwaccel_device foo -i input.mp4 -filter_hw_device foo -vf 'format=nv12|vaapi,hwupload' -c:v h264_vaapi output.mp4

尺寸变换

想把大而高清的视频,变成尺寸较小,文件大小也更小的视频也是个普遍用例。 下面这个命令就可以完成改变尺寸的任务,对图片文件也有效。

ffmpeg -i input.mp4 -s 640x360 output.mp4

上面的命令由-s 640x360定义了输出视频的画面尺寸会是640×360。

使用vaapi硬件resize

vf 'format=nv12,hwupload,scale_vaapi=w=1920:h=1080'剪切

只想取视频的某一部分也有很方便的命令:

ffmpeg -i input.mp4 -ss 5 -t 10 output.mp4

上面的命令-ss 5指定从输入视频第5秒开始截取,-t 10指明最多截取10秒。 但是上面的命令可能会比较慢,更好的命令如下:

ffmpeg -ss 5 -i input.mp4 -t 10 -c:v copy -c:a copy output.mp4

上面的命令把-ss 5放到-i前面,与原来的区别是,这样会先跳转到第5秒在开始解码输入视频,而原来的会从开始解码,只是丢弃掉前5秒的结果。 而-c:v copy -c:a copy标示视频与音频的编码不发生改变,而是直接复制,这样会大大提升速度,因为这样就不需要完全解码视频(视频剪切也不需要完全解码)。

换一条例子

-ss:截取开始时间点, -t:要截取的视频长度(15秒)

ffmpeg -i yourvideoname.mp4 -ss 00:00:00 -codec copy -t 15 outputclip.mp4

图片序列与视频的互相转换

ffmpeg可以把一组图片转换成一个视频(可以把gif动画也当成一种视频格式),反之亦可。命令如下

ffmpeg -i %04d.jpg output.mp4

ffmpeg -i input.mp4 %04d.jpg

第一行命令是把0001.jpg、0002.jpg、0003.jpg等编码成output.mp4,第二行则是相反把input.mp4变成0001.jpg……。 %04d.jpg表示从1开始用0补全的4位整数为文件名的jpg文件序列。 如果想要序列文件名为hello_00001.png等等的话,就是hello_%05d.png

如果编码视频的时候还想加入声音,则如下这般添加一个输入文件:

ffmpeg -i input.mp3 -i %04d.jpg output.mp4

改变视频FPS

FFmpeg可以用于降低或提高视频的帧率,因为信息丢失不可逆法则,提高帧率只会简单地让某些帧的画面多重复一次或多次,所以提高帧率不会提高画质。

ffmpeg -i input.mp4 -r 30 output.mp4

上面的命令,不论原始视频帧率是多少,输出视频都会是30帧每秒。这种情况之下视频的时间轴不会变化,不会有慢动作或快动作的效果。

ffmpeg -r 30 -i input.mp4 output.mp4

上面这种条换顺序之后的写法比较有意思,-r 30放在输入文件之前表示影响的时输入文件,而非输出文件。 这样的命令表达的是,把输入文件当做30帧每秒,而忽略它的原始帧率。这样如果原来的视频FPS是25,被视作30之后,输出的视频会有快进的效果。 这个命令没有指定输出视频的FPS,默认会与输入文件保持一样,可以与本节第一个命令和在一起,写两个-r参数,第一个指定输入FPS,第二个指定 输出FPS即可既控制播放速度,又控制输出帧率。

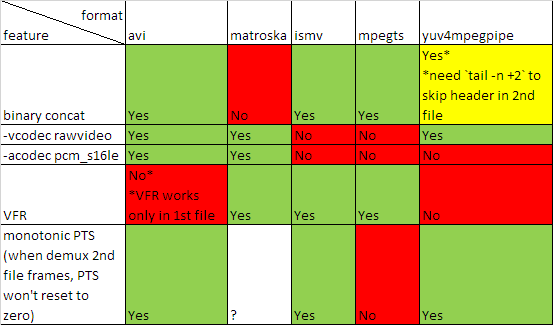

H264视频首尾拼接

如果确定输入文件都是H264编码,且尺寸、帧率等都相同,先把源视频转换成用于直播的ts格式。 然后直接对多个ts文件进行文件级的拼接,然后在转换回到目标格式。这个过程中,不会发生格式转换,所以非常迅速。

ffmpeg -i q.mp4 -c copy -bsf h264_mp4toannexb q.ts

ffmpeg -i r.mp4 -c copy -bsf h264_mp4toannexb r.ts

ffmpeg -i "concat:q.ts|r.ts" -c copy -bsf aac_adtstoasc qr.mp4

音频篇

以下命令主要用于音频操作。有许多上面已经给出的视频操作,比如格式转换,剪切等也可适用于音频。大部分视频也都包含音频,所以下面的命令 往往可以与视频命令混合适用。

提取音乐中的封面图片

有些音乐文件包含专辑封面图片在里面,可以用如下命令简单取出。命令从字面意义来讲,就是把MP3格式转换成JPEG格式了。

ffmpeg -i input.mp3 cover.jpg

音乐有损压缩

在某些场合下,比如在给网站做背景音乐,或音乐网站提供预览版音乐时,会选择以牺牲音频质量为代价降低文件大小,让网络播放更顺畅。 一个典型的压缩命令如下:

ffmpeg -i input.mp3 -ac 1 -ar 32k -bit_rate:a 128k output.mp3

上面命令做了三件事情:

-ac 1指定只保留一个声道,所有声道都融合成一个(这里有个FFmpeg的bug,输出音量会变小)。-ar 32k表示采样率改为32000,通常的高保真音频都是48K左右,这个数值变小,会裁剪掉高音部分,32K会裁掉不少高音,不过普通人如果没个对比,听不出什么问题。 如果音频文件不是音乐,而是人声内容(比如广播),则可以打手一挥设置成22k或16k(电话是16k)-bit_rate:a 128k设置的时音频比特率,如果是-bit_rate:v就成了视频比特率,128K表示,输出文件大概每秒钟的内容会有16KB左右的文件大小,需要至少128kbps的网络才能流畅播放 128K算是比较理想的比特率,文件小,音频质量损失又不是特别明显(对于普通人)

苹果系统的问题

经过FFmpeg处理的音频文件,在苹果的系统(包括OSX、iOS)以及苹果的播放器(iTunes、QuickTime)上往往会显示错误的长度时间。 这是个FFmpeg潜在的Bug,不过可以通过添加参数规避:

ffmpeg -i input.mp3 -write_xing 0 .... output.mp3

这样输出的文件在苹果产品上就会表现正常。原先不正常的文件,亦可以使用这个参数再经过一次ffmpeg处理来解决长度问题。

ffplay

ffplay是FFmpeg家族中的一个媒体文件播放器,可以播放许多种格式,不过与其说它是个完整的播放器,不如说这是个DEMO程序, 用来演示如何使用FFmpeg提供的解码接口来做播放器。它的命令很简单,通常播放一个正常的媒体文件只需要如下

ffplay target.mp4

ffplay可以接受参数,相当于ffmpeg程序里-i之前的参数,用来改变输入文件的输入方式,比如通过改变FPS造成快进或慢镜头的效果。

ffprobe

ffprobe可以非常方便地用于检测媒体文件的一些隐藏信息,通常只有更深层次的专业人员才会用到。一个典型的命令如下:

ffprobe target.mp4 -show_format -show_streams -print_format json -loglevel fatal

Use -safe 0 and -protocol_whitelist file,http,https,tcp,tls arguments. Full example at very bottom.

I was trying to use FFmpeg’s concat demuxer like so:

# inputs.txt

file 'http://www.example1.com/video1.mp4'

file 'https://www.example2.com/video2.mp4'

ffmpeg -f "concat" -i "./inputs.txt" -codec "copy" "./concated.mp4"

First I got the error:

[concat @ 0x00] Unsafe file name 'http://www.example1.com/video1.mp4'

./inputs.txt: Operation not permitted

This was solved by adding the -safe 0 argument. Then I got the error:

[http @ 0x00] Protocol not on whitelist 'file,crypto'!

[concat @ 0x00] Impossible to open 'http://www.example1.com/video1.mp4'

./inputs.txt: Invalid argument

I thought I would be able to solve this by simple adding -protocol_whitelist file,http,https but then the error became:

[tcp @ 0x00] Protocol not on whitelist 'file,http,https'!

[concat @ 0x00] Impossible to open 'http://www.example1.com/video1.mp4'

./inputs.txt: Invalid argument

I did not understand why my HTTP protocol input was still being rejected. http was clearly in the protocol whitelist. Then I noticed the small diference between the previous two errors. Look at the very first word in those errors. http vs tcp. I realized that the first word in brackets before the error was the protocol that was being rejected.

The solution is to also add tcp to the protocol whitelist as well (also tls if you want to support HTTPS). Here was my final command:

ffmpeg -f "concat" -safe "0" -protocol_whitelist "file,http,https,tcp,tls" -i "./inputs.txt" -codec "copy" "./concated.mp4"

Using VAAPI’s hardware accelerated video encoding on Linux with Intel’s hardware on FFmpeg and libav

https://gist.github.com/Brainiarc7/95c9338a737aa36d9bb2931bed379219

Hello, brethren 🙂

As it turns out, the current version of FFmpeg (version 3.1 released earlier today) and libav (master branch) supports full H.264 and HEVC encode in VAAPI on supported hardware that works reliably well to be termed “production-ready”.

Assumptions:

Before taking on this manual, the author assumes that:

- The end-user can comfortably install and configure their Linux distribution of choice.

- The end user can install, upgrade, downgrade and resolve both conflicts and dependency resolution of packages on his/her distribution’s package manager.

- That the user is comfortable with the Linux terminal, and can navigate through it.

- Basic competence on the shell, such as reading man files, using a text editor of choice, manipulating file operations on the same, etc is assumed.

And as an indemnity clause, I, the author, will not be liable for any damage, implied or otherwise, to your files, hardware or the stability of your machine as a consequence to using these instructions to achieve a similar feat as described in this gist.

Implications:

It means that when you’re encoding content for use with your blogs or some fancy youtube download, you can do it much, much faster on hardware with lower processor utilization (so you can multi-task) , lesser heat output and, as a plus, is significantly faster (As tested on my end, ~8.7x for 1080p and ~4.2x for 4k encodes with reference media) compared to a pure, software-based approach as offered by libx264 and similar implementations, albeit at an acceptable quality compromise.

Here goes:

First, you will need to build ffmpeg (and libav,as per your preferences) with appropriate arguments. –enable-vaapi switch should be enough, though.

Here are my build options (Note that I load ffmpeg and libav via the module system):

FFmpeg’s module files are here, and as more versions are compiled, more modules will be added. Libav’s module files are here, and as more versions are compiled, more modules will be added.

FFmpeg‘s configuration switches used:

./configure --enable-nonfree --enable-gpl --enable-version3

--enable-libass --enable-libbluray --enable-libmp3lame

--enable-libopencv --enable-libopenjpeg --enable-libopus

--enable-libfaac --enable-libfdk-aac --enable-libtheora

--enable-libvpx --enable-libwebp --enable-opencl --enable-x11grab

--enable-opengl --cpu=native --enable-nvenc --enable-vaapi

--enable-vdpau --enable-ladspa --enable-libass --enable-libgsm

--enable-libschroedinger --enable-libsmbclient --enable-libsoxr

--enable-libspeex --enable-libssh --enable-libwavpack --enable-libxvid

--enable-libx264 --enable-libx265 --enable-netcdf --enable-openal

--enable-openssl --enable-cuda --prefix=/apps/ffmpeg/git --enable-omx

Libav‘s configuration switches used:

./configure --prefix=/apps/libav/11.7 --enable-gpl --enable-version3

--enable-nonfree --enable-runtime-cpudetect --enable-gray

--enable-vaapi --enable-vdpau --enable-vda --enable-libmp3lame

--enable-libopenjpeg --enable-libopus --enable-libfaac

--enable-libfdk-aac --enable-libtheora --enable-libvpx

--enable-libwebp --enable-x11grab --cpu=native --enable-vaapi

--enable-vdpau --enable-libgsm --enable-libschroedinger

--enable-libspeex --enable-libwavpack --enable-libxvid

--enable-libx264 --enable-libx265 --enable-openssl --enable-nvenc

--enable-cuda --enable-omx

Then run make and make install to build and install the toolkits respectively.

Warning: These options are for reference only, a useful FFmpeg build will require you to install appropriate dependencies for some build options as suited to your environment and platform. Modify as needed. Also see the indemnity clause at the top of this document.

Here are the dependencies I had to install on my end (without acounting for the OpenMAX IL bellagio back-end):

sudo apt-get install yasm ladspa-sdk ladspa-foo-plugins ladspalist libass5 libass-dev libbluray-bdj libbluray-bin libbluray-dev libbluray-doc libbluray1 libmp3lame-dev \ libmp3lame-ocaml libmp3lame-ocaml-dev libmp3lame0 libsox-fmt-mp3 libopencv-* opencv-* python-cv-bridge python-image-geometry python-opencv python-opencv-apps gstreamer1.0-vaapi gstreamer1.0-vaapi-doc libopenjp2-* libopenjp2-7-dev libopenjp2-7-dbg libopenjp3d7 libopenjpeg-dev libopenjpeg-java libopenjpeg5 libopenjpeg5-dbg libopenjpip7 openjpeg-tools libopus-dbg libopus-dev libopus-doc libopus0 libtag1-dev libtag1-doc libtag1v5 libtagc0 libtagc0-dev libopus-ocaml libopus-ocaml-dev libopusfile-dev libopusfile-doc libopusfile0 libvorbis-java opus-tools opus-tools-dbg libfaac-dev libfaac0 fdkaac libfdk-aac0 libfdk-aac0-dbg libfdk-aac-dev libtheora-dbg libtheora-dev libtheora-doc libtheora0 libtheora-bin libtheora-ocaml libtheora-ocaml-dev libvpx-dev libvpx-doc libvpx3 libvpx3-dbg libwebp-dev libwebp5 libwebpdemux1 libwebpmux1 opencl-headers mesa-vdpau-drivers libvdpau-va-gl1 vdpauinfo vdpau-va-driver libvdpau-doc libvdpau-dev libvdpau1 libvdpau1-dbg libgsm-tools libgsm0710-0 libgsm0710-dev libgsm0710mux3 libgsm1 libgsm1-dbg libgsm1-dev sox libsox-dev libsox-fmt-all libsox-fmt-alsa libsox-fmt-ao libsox-fmt-base libsox-fmt-mp3 libsox-fmt-oss libsox-fmt-pulse libsox2 libsoxr-dev libsoxr-lsr0 libschroedinger-dev libschroedinger-doc libschroedinger-ocaml libschroedinger-ocaml-dev libschroedinger-1.0-0 libsmbclient libsmbclient-dev smbclient libspeex-dev libspeex1 libspeexdsp-dev libspeexdsp1 libspeex-ocaml libspeex-ocaml-dev libspeex-dbg libssh-4 libssh-dev libssh-dbg libssh-doc libssh-gcrypt-4 libssh2-1 libssh2-1-dev libwavpack-dev libwavpack1 libxvidcore-dev libxvidcore4 libx265-dev libx265-79 libx265-doc libx264-148 libx264-dev libnetcdf-* netcdf-* libopenal-* openal-info openssl

When done, you may then create and load the appropriate environment modules for both ffmpeg and libav as your choices go. Don’t load both at the same time, though 🙂 (Mark them as module conflicts to ensure that if this is set up on a cluster, library conflicts do not occur when users inadvertently load both of them by accident in the same session).

Now, we get to the interesting bits:

Encoding with VAAPI

You’ll notice that we pass several arguments to ffmpeg as indicated below:

ffmpeg -loglevel debug -hwaccel vaapi -vaapi_device /dev/dri/renderD128 -i "input

file" -vf 'format=nv12,hwupload' -map 0:0 -map 0:1 -threads 8 -aspect

16:9 -y -f matroska -acodec copy -b:v 12500k -vcodec h264_vaapi

"output file"

Let’s break down these arguments to their meaning:

(a) .-loglevel tells ffmpeg to log ffmpeg events as debug output. This will be very verbose, and is completely optional. You can disregard this.

(b). -vaapi_device: This is important. You must select a valid VAAPI H/W context device to which you will upload textures to via hwupload, formatted in the NV12 colorspace. This points to a /dev/dri/render*_ file on your Linux system.

(c). -vf : This is an inbuilt ffmpeg option that allows you to specify codec options/arguments to be passed to our encoder, in this case, h264_vaapi (Remember, we built this when we passed –enable-vaapi at the configuration stage). Here, we tell ffmpeg to convert all textures to one colorspace, NV12 (As it’s the one accepted by Intel’s QuickSync hardware encoder) and to also use hwupload, an ffmpeg intrinsic, that tells the program to asynchronously copy the converted pixel data to VAAPI’s surfaces.

(d). – threads : Specifies the number of threads that FFmpeg should use. By default, use the number of logical processors available on your processor here. On Intel processors that support Hyperthreading, multiply the number of cores your processor has by 2.

(e). -f : Specifies the container format specification you can use. This can be Matroska, webm, mp4, etc. Take your pick (as per your container constraints).

(f). -acodec: Specifies the audio codec to use when transcoding the video’s audio stream. In the example given above, we use ffmpeg’s muxers to copy the audio stream as is, untouched.

(g). -vcodec: Selects the video encoder to use. In this case, we selected h264_vaapi, our key point of interest here.

(h).-hwaccel vaapi: This instructs ffmpeg to use VAAPI based hardware accelerated decode (for supported codecs, see platform limits), and it can drastically lower the processor load during the process. Note that you should only use this option if your hardware supports hardware-accelerated decoding via VAAPI for the source fornat being encoded.

(i). Using the vaapi_scaler in the video filters: It is possible to use Intel’s QuickSync hardware via VAAPI for resize and scaling (when up-or downscaling the input source to a higher or lower resolution), using a filter snippet such as the one shown below:

vf 'format=nv12,hwupload,scale_vaapi=w=1920:h=1080'

You may specify a different resolution by changing the dimensions in =w= and :h= to suit your needs.

See an example of this filter snippet used above in the two-pass example in FFmpeg below.

(j). -hwaccel_output_format : This option should be used every time you declare the -hwaccel method as vaapi , so that the decode stage takes place entirely in hardware. This option generates decode output directly on VAAPI hardware surfaces, speeding up decode performance significantly.

You may confirm supported decode formats on your setup by running vainfo:

vainfo

Sample output on a Haswell testbed:

libva info: VA-API version 0.39.0

libva info: va_getDriverName() returns 0

libva info: Trying to open /usr/lib/x86_64-linux-gnu/dri/i965_drv_video.so

libva info: Found init function __vaDriverInit_0_39

libva info: va_openDriver() returns 0

vainfo: VA-API version: 0.39 (libva 1.7.0)

vainfo: Driver version: Intel i965 driver for Intel(R) Haswell Mobile - 1.7.0

vainfo: Supported profile and entrypoints

VAProfileMPEG2Simple : VAEntrypointVLD

VAProfileMPEG2Simple : VAEntrypointEncSlice

VAProfileMPEG2Main : VAEntrypointVLD

VAProfileMPEG2Main : VAEntrypointEncSlice

VAProfileH264ConstrainedBaseline: VAEntrypointVLD

VAProfileH264ConstrainedBaseline: VAEntrypointEncSlice

VAProfileH264Main : VAEntrypointVLD

VAProfileH264Main : VAEntrypointEncSlice

VAProfileH264High : VAEntrypointVLD

VAProfileH264High : VAEntrypointEncSlice

VAProfileH264MultiviewHigh : VAEntrypointVLD

VAProfileH264MultiviewHigh : VAEntrypointEncSlice

VAProfileH264StereoHigh : VAEntrypointVLD

VAProfileH264StereoHigh : VAEntrypointEncSlice

VAProfileVC1Simple : VAEntrypointVLD

VAProfileVC1Main : VAEntrypointVLD

VAProfileVC1Advanced : VAEntrypointVLD

VAProfileNone : VAEntrypointVideoProc

VAProfileJPEGBaseline : VAEntrypointVLD

Supported encode formats are appended with the VAEntrypointEncSlice fields, and all decode formats(s) for your SKU will be listed under the VAEntryPointVLD and VAEntrypointVideoProc fields.

To interpret the output above, we can learn that the Haswell SKU above supports VAAPI – based hardware-accelerated decode for H.264 Simple, Main and Stereo High profiles (I’d assume that the Stereo High profile infers to H.264’s Multi-view coding encode mode, useful for encoding 3D Blurays and similar media, implying feature parity with Windows-based implementations where MVC encodes and decodes are supported by Intel QuickSync. Need to test that sometime).

The other arguments are pretty standard to FFmpeg and need no introduction 🙂

You may also use extra options such as QP mode (for constant-rate quality encoding) with this codec in ffmpeg as shown:

ffmpeg -loglevel debug -vaapi_device /dev/dri/renderD128 -i "input file" -vf 'format=nv12,hwupload' -map 0:0 -map 0:1 -threads 8 -aspect 16:9 -y -f matroska -acodec copy -vcodec h264_vaapi -qp 19 -bf 2 "output file"

Here, you’ll notice that we’ve added a few extra options to the arguments passed to the selected video encoder, h264_vaapi, and they are as follows:

(a). -qp: This option selects Fixed QP of P frames, and is ignored if bit-rate is set instead. Particularly useful for CRF-based encodes where a constant quality is required without bit-rate constraints. For a standard reference, a QP value of ~18 gives an approximate visual quality value similar to lossless compression, and going higher (~51) will give you way worse visual quality.

(b). -bf: This option toggles the maximum number of B-frames (bi-directional) between P-(progressive) frames. You may pump this higher than the default (2) if your selected encoder profile is High or better. Recommended: Leave this at the default (2).

In my tests, it’s also possible to do two-pass encoding with this encoder (h264_vaapi) in ffmpeg, as illustrated in the example below:

ffmpeg -loglevel debug -hwaccel vaapi -hwaccel_output_format vaapi -i "input-file" -vaapi_device /dev/dri/renderD129 -vf 'format=nv12,hwupload,scale_vaapi=w=1920:h=1080' -pass 1 -qp:v 19 -b:v 10.5M -c:v h264_vaapi -bf 4 -threads 4 -aspect 16:9 -an -y -f mp4 "/dev/null" && ffmpeg -loglevel debug -hwaccel vaapi -hwaccel_output_format vaapi -i "phfx4k.mkv" -vaapi_device /dev/dri/renderD129 -vf 'format=nv12,hwupload,scale_vaapi=w=1920:h=1080' -pass 2 -acodec copy -c:v h264_vaapi -bf 4 -qp:v 19 -b:v 10.5M -threads 4 -aspect 16:9 -y -f mp4 "output.mp4"

Let’s break that down:

With ffmpeg (and libav also), you must specify both passes sequentially (-pass 1 and -pass 2) because ffmpeg does not reiterate over input files for multiple passes. Secondly, this allows the user to tune the two-pass encoding as he/she sees fit, for example, by skipping audio processing in the first pass (-an) and only copying/muxing the audio stream from the input file’s container specification into the output file’s container (-acodec copy), as illustrated in the examples above.

And now we move on to libav’s options for a similar encode:

avconv -v 55 -y -vaapi_device /dev/dri/renderD128 -hwaccel vaapi -hwaccel_output_format vaapi -i input.mkv \

-c:a copy -vf 'format=nv12|vaapi,hwupload' -c:v h264_vaapi -bf 2 -b 12500k output.mkv

Let’s break down these arguments to their meaning:

(a) .-v : This defines avconv’s verbosity level. This one is completely optional, though its’ regarded as good practice to leave it enabled and set to a reasonable verbosity level as desired for troubleshooting and diagnostics purposes.

(b). -vaapi_device: This is important. You must select a valid VAAPI H/W context device to which you will upload textures to via hwupload, formatted in the NV12 colorspace. This points to a /dev/dri/render*_ file on your Linux system.

(c). -hwaccel: This option allows you to select the hardware – based accelerated decoding to use for the encode session. In our case above, we are picking vaapi as this has a positive impact on encoder performance. A nice freebie.

(d). -hwaccel_output_format : This option should be used every time you declare the -hwaccel method as vaapi , so that the decode stage takes place entirely in hardware. This option generates decode output directly on VAAPI hardware surfaces, speeding up decode performance significantly.

(e). -vf : This is an inbuilt libav option that allows you to specify video filter options to be passed to our encoder, in this case, h264_vaapi (Remember, we built this when we passed –enable-vaapi at the configuration stage). Here, we tell libav to convert all textures to one colorspace, NV12 (As it’s the one accepted by Intel’s QuickSync hardware encoder) and to also use hwupload, a libav intrinsic, that tells the program to asynchronously copy the converted pixel data to VAAPI’s surfaces. This argument also includes the hardware accelerated decode output format we requested earlier, raw VAAPI hardware surfaces.

(f). -bf : Specifies the bframe setting to use. Sane values for Intel ‘s Quick Sync encode hardware should be between 2 and 4. Test and report back.

(g). -c:a: Specifies the audio codec to use when transcoding the video’s audio stream. In the example given above, we use libav’s muxers to copy the audio stream as is, untouched.

(h). -c:v: Selects the video encoder to use. In this case, we selected h264_vaapi, our key point of interest here. (i). -b: Selects the video stream’s bitrate passed to the encoder, h264_vaapi.

You may see the original documentation on Libav’s website here on build instructions, using the alternate hevc_vaapi on supported hardware, encoder limitations, caveats, etc.

If all well according to plan, your video file should be encoded to H.264, muxed into the selected container and be done with.

See the screen-shot library here.

Extra information:

You can always view the build configuration of your Ffmpeg pipeline at any times by running:

For FFmpeg:

lin@mjanja:~$ ffmpeg -buildconf

ffmpeg version N-80785-g0fd76d7 Copyright (c) 2000-2016 the FFmpeg developers

built with gcc 5.3.1 (Ubuntu 5.3.1-14ubuntu2.1) 20160413

configuration: --enable-nonfree --enable-gpl --enable-version3 --enable-libass --enable-libbluray --enable-libmp3lame --enable-libopencv --enable-libopenjpeg --enable-libopus --enable-libfaac --enable-libfdk-aac --enable-libtheora --enable-libvpx --enable-libwebp --enable-opencl --enable-x11grab --enable-opengl --cpu=native --enable-nvenc --enable-vaapi --enable-vdpau --enable-ladspa --enable-libass --enable-libgsm --enable-libschroedinger --enable-libsmbclient --enable-libsoxr --enable-libspeex --enable-libssh --enable-libwavpack --enable-libxvid --enable-libx264 --enable-libx265 --enable-netcdf --enable-openal --enable-openssl --prefix=/apps/ffmpeg/git --enable-omx

libavutil 55. 27.100 / 55. 27.100

libavcodec 57. 48.101 / 57. 48.101

libavformat 57. 40.101 / 57. 40.101

libavdevice 57. 0.102 / 57. 0.102

libavfilter 6. 46.102 / 6. 46.102

libswscale 4. 1.100 / 4. 1.100

libswresample 2. 1.100 / 2. 1.100

libpostproc 54. 0.100 / 54. 0.100

configuration:

--enable-nonfree

--enable-gpl

--enable-version3

--enable-libass

--enable-libbluray

--enable-libmp3lame

--enable-libopencv

--enable-libopenjpeg

--enable-libopus

--enable-libfaac

--enable-libfdk-aac

--enable-libtheora

--enable-libvpx

--enable-libwebp

--enable-opencl

--enable-x11grab

--enable-opengl

--cpu=native

--enable-nvenc

--enable-vaapi

--enable-vdpau

--enable-ladspa

--enable-libass

--enable-libgsm

--enable-libschroedinger

--enable-libsmbclient

--enable-libsoxr

--enable-libspeex

--enable-libssh

--enable-libwavpack

--enable-libxvid

--enable-libx264

--enable-libx265

--enable-netcdf

--enable-openal

--enable-openssl

--prefix=/apps/ffmpeg/git

--enable-omx

On help and documentation:

List all formats:

ffmpeg -formats

Display options specific to, and information about, a particular muxer:

ffmpeg -h muxer=matroska

Display options specific to, and information about, a particular demuxer:

ffmpeg -h demuxer=gif

Codecs (encoders and decoders):

List all codecs:

ffmpeg -codecs

List all encoders:

ffmpeg -encoders

List all decoders:

ffmpeg -decoders

Display options specific to, and information about, a particular encoder:

ffmpeg -h encoder=mpeg4

Display options specific to, and information about, a particular decoder:

ffmpeg -h decoder=aac

Reading the results

There is a key near the top of the output that describes each letter that precedes the name of the format, encoder, decoder, or codec:

$ ffmpeg -encoders

[…]

Encoders:

V..... = Video

A..... = Audio

S..... = Subtitle

.F.... = Frame-level multithreading

..S... = Slice-level multithreading

...X.. = Codec is experimental

....B. = Supports draw_horiz_band

.....D = Supports direct rendering method 1

------

[…]

V.S... mpeg4 MPEG-4 part 2

In this example V.S… indicates that the encoder mpeg4 is a Video encoder and supports Slice-level multithreading.

Extra notes for AMD hardware supporting VCE:

If you have a supported GCN+ AMD GPU running on Linux with the mesa driver stack, you may be able to use the AMD VCE Block via VAAPI with an example such as the one shown below:

DRI_PRIME=1 LIBVA_DRIVER_NAME=radeonsi ffmpeg -hwaccel vaapi -vaapi_device /dev/dri/renderD128 -hwaccel_output_format vaapi \

-framerate 30 -video_size 1920x1200 -f x11grab -i :0.0 -f pulse -ac 2 -i 1 \

-vf 'format=nv12,hwupload' -threads 8 \

-vcodec h264_vaapi -bf 0 -acodec pcm_s16le output.mkv

Where we capture from the screen via x11grab and the audio from a pulseaudio device.

You must set the LIBVA_DRIVER_NAME and the DRI_PRIME=1 environment variables to radeonsi prior to using VAAPI on VCE, and ensure that the -vaapi_device points to the correct renderer.

Note that with AMD hardware, we generally disable B-Frame support as newer SKUs such as the RX 460/470/480 and their rebrands (Polaris-based) do not support B-Frames in H.264 encoding. See this issue on Github for more details.

Create a thumbnail image every X seconds of the video

https://trac.ffmpeg.org/wiki/Create%20a%20thumbnail%20image%20every%20X%20seconds%20of%20the%20video

-frames option

Output a single frame from the video into an image file:

ffmpeg -ss 00:00:14.435 -to 00:00:16 -i input.flv -frames:v *** out03%d.png

This example will seek to the position of 0h:0m:14sec:435msec end to 00:00:16 and output each frame (-frames:v *** (***为释放数量,根据时间和帧率计算)) from that position into PNG file.

fps video filter

Output one image every second, named out1.png, out2.png, out3.png, etc.

ffmpeg -i input.flv -vf fps=1 out%d.png

指定片段指定帧率(20/s)释放

ffmpeg -i -seek 01:12:03.25 -to 01:15:12.36 input.flv -vf fps=20 out%d.png

Output one image every minute, named img001.jpg, img002.jpg, img003.jpg, etc. The %03d dictates that the ordinal number of each output image will be formatted using 3 digits.

ffmpeg -i myvideo.avi -vf fps=1/60 img%03d.jpg

Output one image every ten minutes:

ffmpeg -i test.flv -vf fps=1/600 thumb%04d.bmp

select video filter

Output one image for every I-frame:

ffmpeg -i input.flv -vf "select='eq(pict_type,PICT_TYPE_I)'" -vsync vfr thumb%04d.png